Few things are more frustrating than being neck-deep in a mix, then realizing that two tracks are occupying the same frequency space. No matter what you do, you can't seem to unscramble these instruments — they just keep covering each other up.

In this post, we'll explore frequency collisions — those irritating overlapping frequencies — and give you seven ways to deal with them.

What Are Frequency Collisions?

Frequency collisions occur when similar sounds compete for the same sonic space in your mix.

Because there's only so much frequency bandwidth to go around, the overlapping frequencies in the competing sources obscure each other. This is known as frequency masking.

This masking phenomenon not only makes it difficult to separate the various components of your mix, but it also yields a muddled sound that screams "amateur." Even if you're aiming for a lo-fi aesthetic, you should still be able to hear what's going on in your mix.

What Instruments Mask Each Other?

Every mix is different. That said, there are certain combinations of instruments that seem to be problematic in just about every mix.

- Kick and Bass — These two instruments are embroiled in a serious love/hate relationship; when they complement one another it's pure magic, but when they clash things tend to get ugly.

- Electric Bass and Electric Guitar — Basses are lower-frequency instruments, but the upper-midrange presence of a bass sits in much the same frequency space as that of a guitar.

- Acoustic Guitars and Hi-hats — The pick attack of an acoustic guitar can sound a lot like a hi-hat; thus, one can affect how we perceive the other.

- Electric Guitars and Electric Pianos — Rhythm guitar parts and Rhodes or Wurlies can really mess with each other.

- Snare Drums and Guitars — The frequencies that give girth to snares and guitars occupy the same frequency space.

- Lead Vocals and Acoustic Pianos, Acoustic Guitars, and Electric Guitars — The voice is a multifaceted instrument; just about anything that occupies the midrange frequency spectrum is bound to cause problems.

- Background Vocals and Pads — Since background vox and pads are both supporting elements in a mix, they often mask one another.

- Synth Patches with Other Synth Patches — Synth patches with similar textures are guaranteed to interfere with each other.

Headroom and Why It Matters

When a mix element becomes masked by another element, you may feel compelled to increase the level of the obscured element to make it audible.

This will not work!

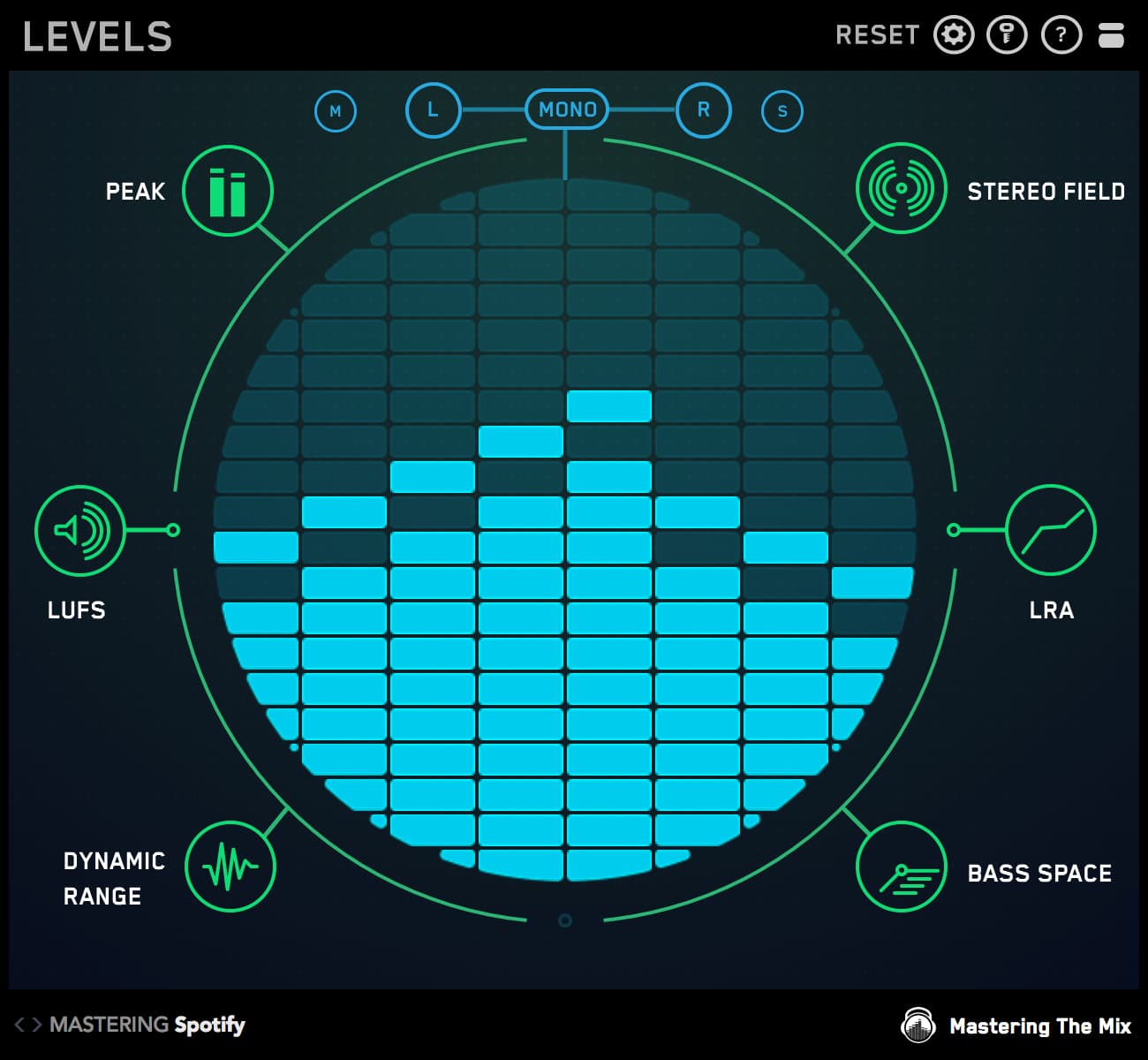

This won't work because there's a finite amount of headroom available in the digital realm. Headroom is, to put it simply, the difference between your track's highest peak and 0dBFS (decibels relative to full scale), the loudest level a digital system can represent.

When you hit 0dBFS you run out of bits, so anything louder gets chopped off. This is clipping, and it causes nasty digital distortion.
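To make the arithmetic concrete, here's a minimal Python sketch that measures peak level and headroom. It assumes floating-point samples where 1.0 represents full scale (0dBFS). The function names and the tiny sample lists are ours, made up purely for illustration.

```python
import math

def peak_dbfs(samples):
    """Return the highest peak of a signal in dBFS (1.0 == full scale)."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")  # pure silence has no measurable peak
    return 20 * math.log10(peak)

def headroom_db(samples):
    """Headroom is the distance between the highest peak and 0dBFS."""
    return 0.0 - peak_dbfs(samples)

# Two hypothetical tracks that each peak at half of full scale.
bass = [0.5, -0.5, 0.25, 0.0]
kick = [0.5, -0.25, 0.1, 0.0]

# Summing tracks on the mix bus adds their samples together.
mix = [b + k for b, k in zip(bass, kick)]

print(round(headroom_db(bass), 2))  # roughly 6dB of headroom on one track
print(round(headroom_db(mix), 2))   # the peaks coincide, so the mix has none left
```

Notice that each track looks comfortable on its own; it's the sum that runs out of room.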

It's crucial that you remain aware of the headroom that's available to you while you're mixing. After all, every track in your mix is fighting for space.

If you increase the level of an obscured track until it becomes audible, you'll mask the track that was originally masking the first track. Then, you'll need to increase the level of that track.

This pattern will then continue until you run out of headroom. That's why increasing levels isn't the best way to fix frequency collisions.

So, what’s the right solution? Read on.

#1 — Use a Balanced Arrangement

Many frequency collisions occur simply because of an unbalanced musical arrangement.

There's a reason why most rock and pop ensembles employ the same elements: lead vocalist, rhythm guitar, lead guitar, drums, and bass. By virtue of arrangement alone, each of these elements occupies its own spot in the frequency spectrum, yielding a balanced-sounding mix.

Therefore, when you're piecing together your composition, it's important that you use instruments that complement one another, rather than instruments that compete with one another. This will prevent frequency collisions from occurring during the mixing process.

With the right arrangement, your project will practically mix itself!

#2 — Deploy Highpass and Lowpass Filters

Instruments are made up of many frequencies. These frequencies are a huge part of what gives each instrument its distinct sound.

Not all frequencies will be relevant in the context of your mix, however.

For example, the lowest frequencies of a vocal track, while present in the real world, may not be adding anything to your mix. Worse, they may be interfering with your other tracks.

The same could be said for the uppermost frequencies of a distorted electric guitar or synth patch. These frequencies could be obscuring high-frequency instruments, such as cymbals.

The solution is to deploy highpass and lowpass filters. Most commonly used parametric equalizer plug-ins, including the one that comes bundled with your DAW, contain these types of filters.

Highpass Filters

A highpass filter removes frequencies below a defined cutoff frequency, while allowing those above to pass. It's your weapon of choice for taming unwanted low frequencies, and you'll want to employ it on any track that doesn't contain an abundance of low-frequency information. Oftentimes, that's everything except your kick drum and bass.

Start with a cutoff frequency around 40Hz and a gentle slope of 6–12dB per octave. Slowly increase the cutoff frequency until your track sounds thin, then back off until it sounds right.

After applying highpass filters to the appropriate tracks, you'll find that your mix's lower-frequency instruments sound much clearer.
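If you're curious what a gentle highpass does under the hood, here's a rough Python sketch of a first-order (6dB per octave) filter modeled on a simple analog RC circuit. This is an illustration only, not how any particular EQ plug-in is built. The 100Hz cutoff and the 30Hz and 1kHz test tones are assumptions we picked for the demo.

```python
import math

def one_pole_highpass(samples, cutoff_hz, sample_rate=44100):
    """First-order (6dB/octave) highpass, modeled on an analog RC filter."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        # Pass along changes in the input while letting steady levels decay.
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out

def sine(freq_hz, seconds=0.5, sample_rate=44100):
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def peak(samples):
    return max(abs(s) for s in samples)

# Hypothetical vocal-style highpass at 100Hz.
rumble = one_pole_highpass(sine(30), cutoff_hz=100)   # low-end rumble
body = one_pole_highpass(sine(1000), cutoff_hz=100)   # midrange content

# Measure the second half only, so the filter's start-up transient
# doesn't skew the reading.
print(round(peak(rumble[len(rumble) // 2:]), 2))  # heavily reduced
print(round(peak(body[len(body) // 2:]), 2))      # almost untouched
```

Both test tones go in at the same level; only the rumble comes out quieter, which is exactly the point.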

Lowpass Filters

A lowpass filter removes frequencies above a defined cutoff frequency, while allowing those below to pass. This enables you to attenuate (lower the level of) high frequencies on any track with excessive high-frequency content.

Deploying a lowpass filter in the 10kHz–15kHz range is an easy and effective way to tame out-of-control high frequencies. Slowly decrease the cutoff frequency on the offending track until you can clearly hear the high frequencies of the obscured track(s).
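Here's the same idea as a small Python sketch: a first-order (6dB per octave) lowpass, with the cutoff stepped downward the way you'd turn the knob. It's a simplified stand-in for a real EQ, and the 12kHz and 500Hz test tones are assumptions chosen to represent "harsh highs" and "the part you want to keep."

```python
import math

def one_pole_lowpass(samples, cutoff_hz, sample_rate=44100):
    """First-order (6dB/octave) lowpass, modeled on an analog RC filter."""
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = dt / (rc + dt)
    out = []
    y = 0.0
    for x in samples:
        y += alpha * (x - y)  # move a fraction of the way toward the input
        out.append(y)
    return out

def sine(freq_hz, seconds=0.1, sample_rate=44100):
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def level_db(samples):
    """RMS level in dB, measured on the second half to skip the start-up transient."""
    tail = samples[len(samples) // 2:]
    rms = math.sqrt(sum(s * s for s in tail) / len(tail))
    return 20 * math.log10(rms)

fizz = sine(12000)  # stand-in for harsh guitar or synth highs
body = sine(500)    # stand-in for the part of the sound you want to keep

# Step the cutoff down and watch how much each tone changes, in dB.
for cutoff in (15000, 12500, 10000):
    fizz_change = level_db(one_pole_lowpass(fizz, cutoff)) - level_db(fizz)
    body_change = level_db(one_pole_lowpass(body, cutoff)) - level_db(body)
    print(cutoff, round(fizz_change, 1), round(body_change, 1))
```

As the cutoff drops, the high tone loses more level while the 500Hz tone barely moves.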

A word of caution: be gentle! Using a lowpass filter too aggressively will turn your track into a muddy mess.

Also, for best results, adjust your filters in the context of a full mix. What sounds great isolated could sound terrible with the entire mix playing.

#3 — Fix Unwanted Resonances

Resonances are caused by a buildup of energy in narrow frequency bands within your tracks. Unwanted resonances not only suck the dynamics and headroom out of your mix, but they'll also create unpleasant artifacts that can cause all kinds of frequency collisions.

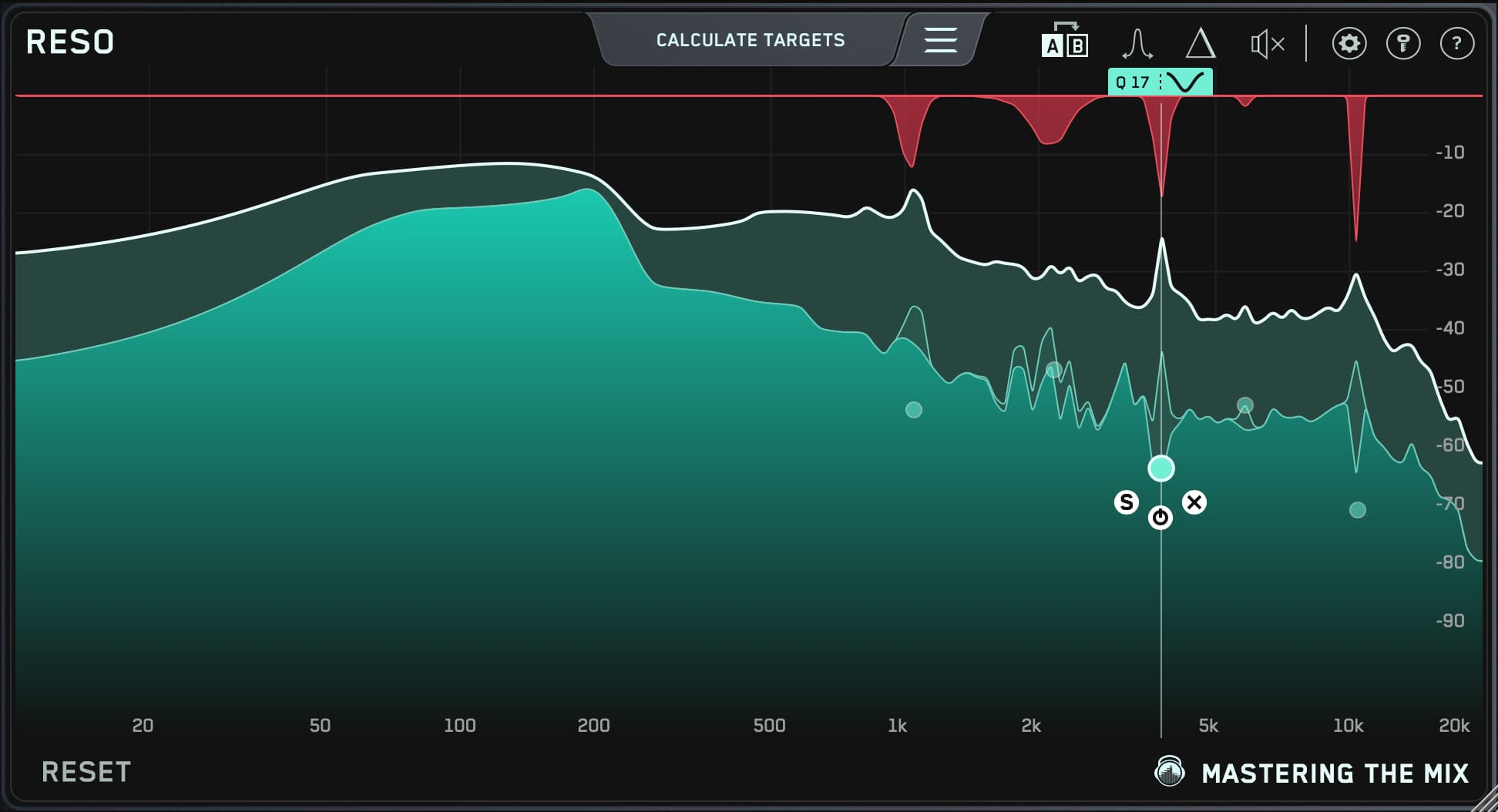

The traditional way to resolve unwanted resonances is to instantiate a parametric EQ plug-in on a track, create a large boost with a narrow bandwidth (a high Q value), then sweep it across the frequency spectrum and listen for any annoying frequencies. After that, you lower the gain on the problem frequency band until the unpleasant artifact disappears.
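For the programmers out there, the manual sweep has a rough numerical analogue: measure how much energy sits at each candidate frequency and see which one sticks out. The Python sketch below does that with a single-bin DFT. It's only an illustration of the idea, and it is not a description of how any plug-in (ours included) detects resonances. The test signal, with its exaggerated 640Hz component, is made up for the demo.

```python
import math

def band_level(samples, freq_hz, sample_rate):
    """Amplitude of one frequency component (a single-bin DFT)."""
    re = im = 0.0
    for i, s in enumerate(samples):
        angle = 2 * math.pi * freq_hz * i / sample_rate
        re += s * math.cos(angle)
        im -= s * math.sin(angle)
    return 2 * math.hypot(re, im) / len(samples)

def sweep(samples, candidates_hz, sample_rate):
    """Measure every candidate frequency and return the loudest one."""
    levels = {f: band_level(samples, f, sample_rate) for f in candidates_hz}
    return max(levels, key=levels.get), levels

# Hypothetical track: three even partials plus one ringing resonance at 640Hz.
sample_rate = 8000
partials = {200: 0.2, 400: 0.2, 640: 0.6, 1000: 0.2}
track = [
    sum(amp * math.sin(2 * math.pi * f * i / sample_rate)
        for f, amp in partials.items())
    for i in range(2000)
]

resonance_hz, levels = sweep(track, range(100, 2001, 10), sample_rate)
print(resonance_hz, round(levels[resonance_hz], 2))
```

Once you know where the buildup lives, you'd cut that band by ear, just as you would after a manual sweep.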

The easy way to eliminate unwanted resonances is with our RESO plug-in. RESO is not only an effective solution for ridding your track of unwanted resonances, but it also does it automatically, without time-consuming EQ sweeps.

Simply place the plug-in on any track, click the Calculate Targets button, and RESO does the rest. RESO not only supplies you with Target Nodes for getting rid of the resonances, but it also provides you with helpful setting suggestions for optimal results.

#4 — Boost Unique Frequencies

If you're trying to blend similar-sounding tracks, such as a DI'd bass guitar and a miked-cab bass guitar, you can carve out space by boosting unique frequencies on each track.

Say you've EQ'd the primary DI'd track by boosting 100Hz, 800Hz, and 5kHz. On the secondary miked-cab track, leave everything below 800Hz unboosted to prevent low-frequency buildup, then boost 900Hz and 3kHz.

Since you're boosting different frequencies, you should be able to give each track a unique enough personality to minimize the unwanted effects of their clashing frequencies. Keep in mind, however, these are general frequency suggestions; do what sounds best for your individual mix.

#5 — Use Complementary EQ

Besides boosting different frequencies, you can also employ complementary EQ.

Start by identifying which specific frequencies are colliding in each of your tracks. After that, boost the offending frequencies slightly in the first track (the one you'd like to highlight most), then cut the same frequencies on the second track.

Complementary EQ is perceived as though you'd increased the level of the first track and decreased the level of the second track; however, you won't be eating up precious headroom by pushing up faders.

After all, you're not actually increasing the level of a track; this is just a perception. What you've actually done is move colliding frequencies out of the way to create space.
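If you'd like to see complementary EQ as numbers, here's a small Python sketch. It builds two mirror-image peaking filters using the widely published Audio EQ Cookbook biquad formulas, then checks the gain each one applies. The 3kHz center frequency, the 2dB amount, the Q of 1.0, and the track names are all assumptions chosen for illustration; your own collisions will live wherever your ears find them.

```python
import cmath
import math

def peaking_eq(freq_hz, gain_db, q=1.0, sample_rate=44100):
    """Biquad peaking-EQ coefficients (Audio EQ Cookbook formulas)."""
    amp = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * freq_hz / sample_rate
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * amp, -2 * math.cos(w0), 1 - alpha * amp]
    a = [1 + alpha / amp, -2 * math.cos(w0), 1 - alpha / amp]
    return b, a

def gain_db_at(coefs, freq_hz, sample_rate=44100):
    """Gain in dB that the filter applies at a given frequency."""
    b, a = coefs
    z1 = cmath.exp(-1j * 2 * math.pi * freq_hz / sample_rate)  # z^-1
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(num / den))

# Hypothetical collision around 3kHz between a lead vocal and a guitar.
vocal_eq = peaking_eq(3000, gain_db=+2.0)   # the track you want to highlight
guitar_eq = peaking_eq(3000, gain_db=-2.0)  # the track you want to tuck away

for freq in (300, 3000):
    print(freq,
          round(gain_db_at(vocal_eq, freq), 2),
          round(gain_db_at(guitar_eq, freq), 2))
```

The two curves mirror each other at the collision point and leave the rest of each track essentially alone.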

#6 — Employ Sidechain Compression

Another way to resolve frequency collisions is with sidechain compression. Instead of trying to separate the similar frequencies with EQ, this technique automatically lowers the level of one signal whenever the other one plays, so that both remain audible.

Sidechain compression differs from the standard variety, in that instead of triggering gain reduction from the audio signal that passes through the compressor, it employs a secondary source to trigger the process. Thus, when the secondary signal exceeds the compressor's threshold, the compressor applies gain reduction to the main signal.

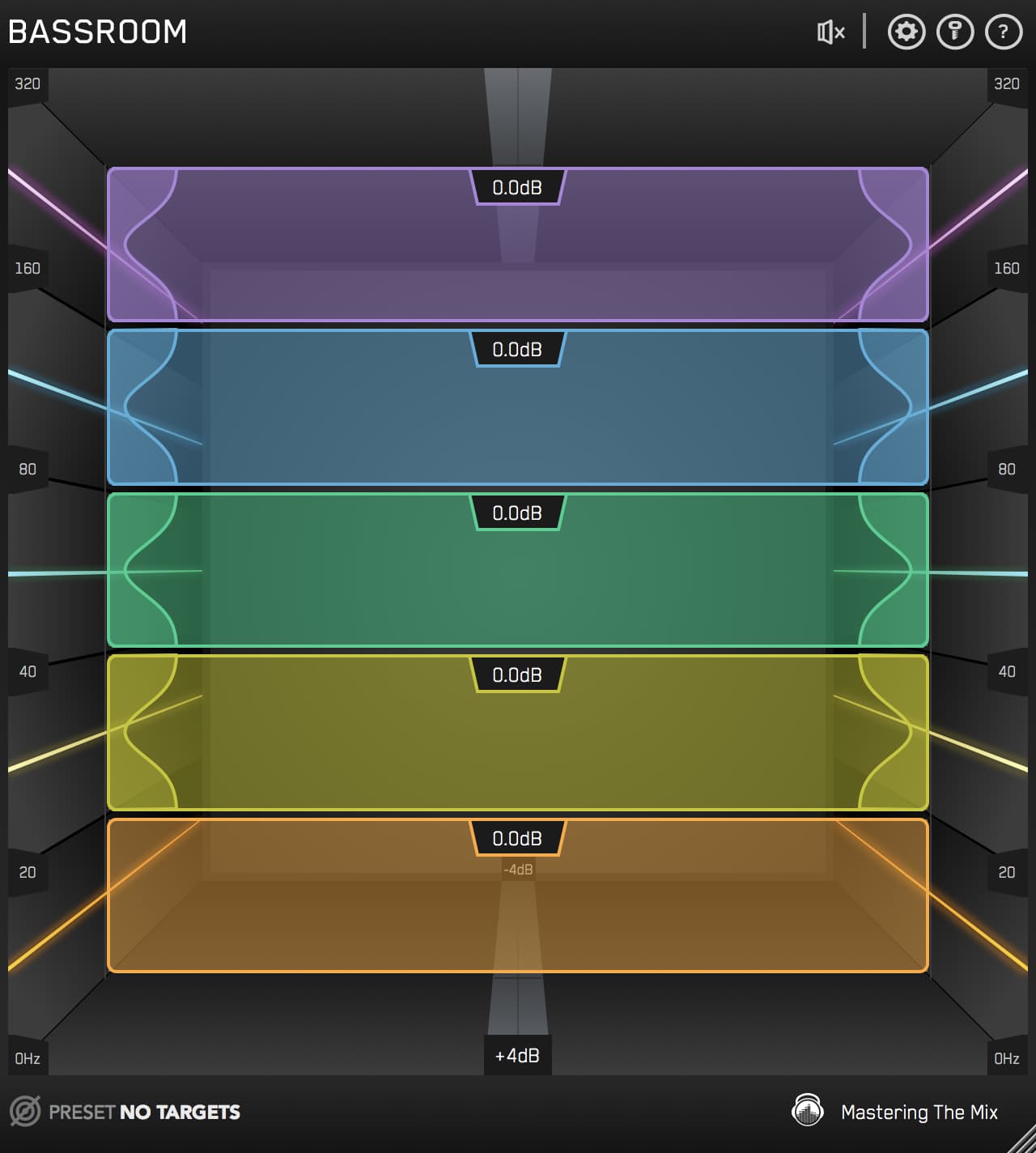

Probably the most common use for sidechain compression is to untangle a kick drum and bass. You do this by placing a compressor on the bass track, then feeding your kick track into the compressor's sidechain.

When you do this, every time the kick sounds, the compressor will automatically lower the level of the bass. Provided you use conservative settings (2–4dB of gain reduction is usually sufficient), you won't even hear the compressor working.
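Here's a bare-bones Python sketch of that kick-and-bass setup. It's a simplified compressor (envelope follower, threshold, and ratio only, with no knee or makeup gain), not a model of any specific plug-in. The threshold, ratio, attack and release times, and the synthetic "kick" and "bass" signals are all assumptions picked for the demo.

```python
import math

def sidechain_duck(main, key, threshold_db=-12.0, ratio=2.0,
                   attack_ms=5.0, release_ms=100.0, sample_rate=44100):
    """Reduce the gain of `main` whenever `key` rises above the threshold.

    Returns the processed signal and the gain reduction (in dB) per sample.
    """
    attack = math.exp(-1.0 / (attack_ms * 0.001 * sample_rate))
    release = math.exp(-1.0 / (release_ms * 0.001 * sample_rate))
    envelope = 0.0
    out, reduction = [], []
    for m, k in zip(main, key):
        level = abs(k)
        # Follow the key signal quickly on the way up, slowly on the way down.
        coef = attack if level > envelope else release
        envelope = coef * envelope + (1 - coef) * level
        level_db = 20 * math.log10(max(envelope, 1e-9))
        over_db = max(0.0, level_db - threshold_db)
        cut_db = over_db * (1 - 1 / ratio)
        out.append(m * 10 ** (-cut_db / 20))
        reduction.append(cut_db)
    return out, reduction

sample_rate = 44100
total = 22050   # half a second of audio
onset = 11025   # the kick enters halfway through

# Sustained 80Hz "bass" and a decaying 60Hz "kick" (both synthetic stand-ins).
bass = [0.5 * math.sin(2 * math.pi * 80 * i / sample_rate) for i in range(total)]
kick = [0.0] * onset + [
    0.9 * math.exp(-(i / sample_rate) / 0.05)
    * math.sin(2 * math.pi * 60 * i / sample_rate)
    for i in range(total - onset)
]

ducked, reduction = sidechain_duck(bass, kick)
print(round(max(reduction[:onset]), 2))  # no kick yet, so no gain reduction
print(round(max(reduction[onset:]), 2))  # a few dB once the kick hits
```

The bass is left completely alone until the kick arrives, then dips by a few decibels and recovers as the kick fades.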

Sidechain compression works in many scenarios; however, it's easiest to deploy when one source contains lots of transients and the other source is long and sustaining. Along with the aforementioned kick and bass, snare drums and guitars are a perfect example of this.

#7 — Throw Distortion on It

Another way to resolve frequency masking is to add distortion or saturation to one of the tracks. The increased harmonic content, especially in the upper midrange, will add presence to a track, without increasing its level.

That said, while saturation and distortion will add midrange presence to your track, it's important that you don't get carried away — an overly distorted track will actually lose its presence and definition.
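To see where that extra harmonic content comes from, here's a short Python sketch that runs a pure 100Hz tone through a tanh soft clipper and measures the third harmonic before and after. Treat it as a generic textbook saturator, not a model of any particular plug-in. The drive amount is an assumption, and we've interpreted "without increasing its level" as matching the output peak to the input peak.

```python
import math

def saturate(samples, drive=4.0):
    """Soft-clip with tanh, then rescale so the peak matches the input peak."""
    shaped = [math.tanh(drive * s) for s in samples]
    peak_in = max(abs(s) for s in samples)
    peak_out = max(abs(s) for s in shaped)
    if peak_out == 0:
        return shaped  # silence in, silence out
    return [s * peak_in / peak_out for s in shaped]

def harmonic_level(samples, freq_hz, sample_rate):
    """Amplitude of one frequency component (a single-bin DFT)."""
    re = im = 0.0
    for i, s in enumerate(samples):
        angle = 2 * math.pi * freq_hz * i / sample_rate
        re += s * math.cos(angle)
        im -= s * math.sin(angle)
    return 2 * math.hypot(re, im) / len(samples)

sample_rate = 8000
clean = [0.5 * math.sin(2 * math.pi * 100 * i / sample_rate) for i in range(800)]
dirty = saturate(clean)

# Same peak level, but the saturated tone now carries energy at 300Hz.
print(max(abs(s) for s in clean), max(abs(s) for s in dirty))
print(harmonic_level(clean, 300, sample_rate))
print(harmonic_level(dirty, 300, sample_rate))
```

Push the drive value higher and the harmonics keep growing while the waveform flattens toward a square wave, which is the "overly distorted" territory to steer clear of.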

Our ANIMATE plug-in is tailor-made for infusing your tracks with character and color. Its four different movement modes, each with individual frequency assignments, will inject any source with lots of ear-grabbing sizzle — without damaging its impact.

Conclusion

Frequency collisions and frequency masking occur in just about every mix. Thus, you'll never master mixing until you learn how to solve these common irritants.

Our blog is chock-full of useful information we've gained from decades of hard-earned studio experience. Keep following for more helpful tips and tricks!